A group of researchers is using artificial intelligence (AI) techniques to calibrate some of NASA’s images of the Sun, helping improve the data that scientists use for solar research.

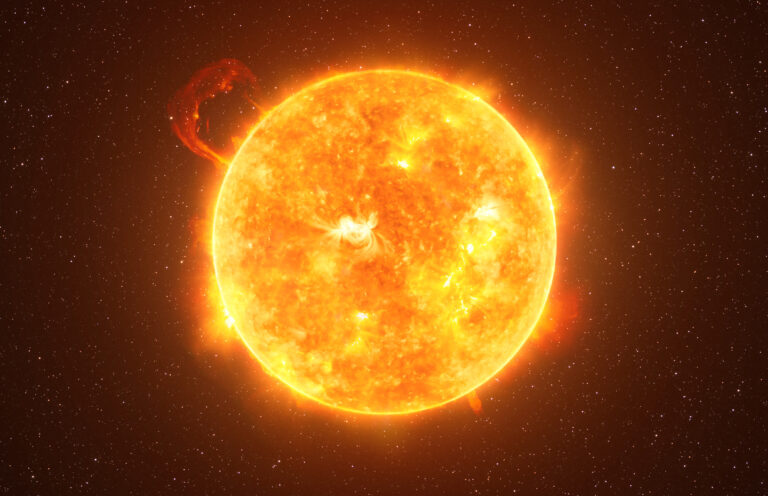

Launched in 2010, NASA’s Solar Dynamics Observatory (SDO) has provided high-definition images of the Sun for over a decade. Its images have given scientists a detailed look at various solar phenomena that can spark space weather and affect astronauts and technology on Earth and in space.

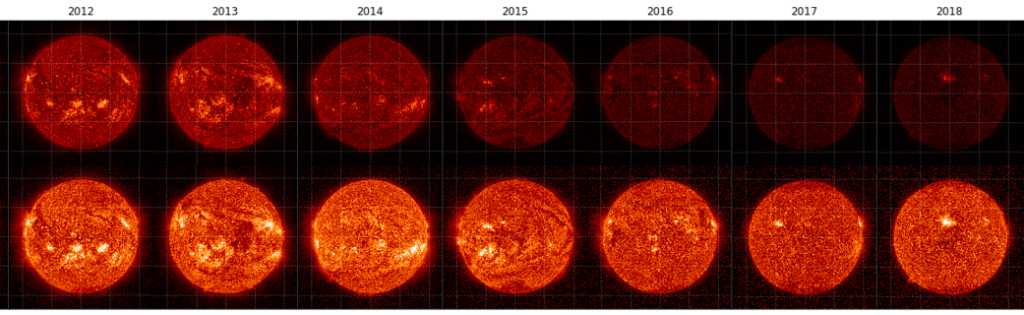

The Atmospheric Imaging Assembly (AIA) is one of two imaging instruments on SDO. It looks constantly at the Sun, taking images in 10 wavelength channels, most of them ultraviolet, as often as every 12 seconds. This creates a wealth of information about the Sun, but AIA’s sensitivity degrades over time, so its data need frequent calibration.

Since SDO’s launch, scientists have used sounding rockets to calibrate AIA. To do so, they fly an ultraviolet telescope on a sounding rocket and compare its measurements with AIA’s. Scientists can then adjust AIA’s data to account for any changes in the instrument.
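In its simplest form, a comparison like this yields one correction factor per wavelength channel: the reference brightness divided by AIA’s brightness for the same target. The toy sketch below assumes a purely multiplicative loss of sensitivity; the channel selection and all brightness values are hypothetical, chosen only for illustration.

```python
# Toy sketch: assumes AIA's sensitivity loss is a simple multiplicative factor.
# Channel labels are AIA wavelengths in angstroms; the brightness values are
# hypothetical, in arbitrary units.
reference = {"171": 1000.0, "193": 800.0, "304": 500.0}  # rocket-borne telescope
aia = {"171": 900.0, "193": 760.0, "304": 350.0}         # AIA, same region and time


def calibration_factors(reference, observed):
    """Return the multiplicative correction for each channel."""
    return {ch: reference[ch] / observed[ch] for ch in reference}


def apply_calibration(observed, factors):
    """Scale each channel's observed value by its correction factor."""
    return {ch: observed[ch] * factors[ch] for ch in observed}


factors = calibration_factors(reference, aia)
corrected = apply_calibration(aia, factors)
print({ch: round(f, 3) for ch, f in factors.items()})
# → {'171': 1.111, '193': 1.053, '304': 1.429}
```

The most degraded channel in this made-up example (304) needs the largest boost; once the factors are known, they can be applied to every AIA image until the next calibration.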

The sounding rocket method has drawbacks. Sounding rockets can launch only so often, but AIA looks at the Sun constantly. That means that between rocket flights there are periods when the calibration is slightly off.

“It’s also important for deep space missions, which won’t have the option of sounding rocket calibration,” said Dr Luiz Dos Santos, a solar physicist at NASA’s Goddard Space Flight Center. “We’re tackling two problems at once.”

With these challenges in mind, scientists explored whether machine learning could calibrate the instrument, with an eye toward continuous calibration.

First, researchers needed to train a machine learning algorithm to recognize solar structures and compare them using AIA data. To do this, they gave the algorithm images from sounding rocket calibration flights and told it the correct amount of calibration each one needed. After enough of these examples, they gave the algorithm similar images and checked whether it identified the correct calibration. With enough data, the algorithm learned to identify how much calibration each image needs.
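The article does not name the model the researchers trained, so the following is only an illustrative stand-in: a k-nearest-neighbours regressor that learns from labelled examples and then predicts the dimming of an image it has not seen. Each “image” is reduced to hypothetical mean brightnesses in three channels, and the labels are synthetic values standing in for sounding-rocket calibrations.

```python
import random

# Hypothetical undegraded mean brightness in three channels (arbitrary units).
# Real work would use full AIA images, not three numbers.
TEMPLATE = [100.0, 80.0, 60.0]


def make_example(rng, dimming):
    """Simulate an observation: slight solar variability, then instrument dimming."""
    return [t * rng.uniform(0.98, 1.02) * dimming for t in TEMPLATE]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def knn_predict(train, features, k=5):
    """Average the known dimming of the k most similar training examples."""
    nearest = sorted(train, key=lambda ex: distance(ex[0], features))[:k]
    return sum(label for _, label in nearest) / k


rng = random.Random(0)

# Labelled training set: each simulated image is paired with its true dimming,
# standing in (hypothetically) for a rocket-measured calibration.
train = []
for _ in range(500):
    d = rng.uniform(0.5, 1.0)
    train.append((make_example(rng, d), d))

# Hold-out check: can the model recover the dimming of an unseen image?
test_dimming = 0.7
estimate = knn_predict(train, make_example(rng, test_dimming))
print(round(estimate, 2))
```

k-nearest neighbours was picked only because it shows the “learn from labelled examples, then predict” loop in a few lines; it is not meant to suggest what the researchers actually used.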

Because AIA looks at the Sun in multiple wavelengths of light, researchers can also use the algorithm to compare specific structures across the wavelengths and strengthen its assessments.

To start, they taught the algorithm what a solar flare looks like by showing it flares across all of AIA’s wavelengths until it recognized them in every type of light. Once the program can recognize a flare without any degradation, it can determine how much degradation is affecting AIA’s current images and how much calibration each one needs.
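The two-step idea in the paragraph above, first learning what an undegraded structure looks like in each wavelength and then measuring how far current observations fall short, can be sketched as follows. This is a simplified assumption of how such a comparison might work, not the researchers’ algorithm: the flare brightnesses, the per-channel dimming values and the helper names are all hypothetical, and each channel’s degradation is taken as the ratio of its average current brightness to the learned template.

```python
import random
from statistics import mean

CHANNELS = ["94", "171", "304"]  # a few AIA wavelengths (angstroms), for illustration


def learn_template(undegraded_examples):
    """Average undegraded observations of one structure type, channel by channel."""
    return {ch: mean(ex[ch] for ex in undegraded_examples) for ch in CHANNELS}


def estimate_degradation(template, current_observations):
    """Per-channel ratio of average current brightness to the undegraded template."""
    return {
        ch: mean(obs[ch] for obs in current_observations) / template[ch]
        for ch in CHANNELS
    }


rng = random.Random(1)
true_flare = {"94": 50.0, "171": 400.0, "304": 250.0}  # hypothetical typical flare
true_dimming = {"94": 0.9, "171": 0.8, "304": 0.6}     # hypothetical degradation


def observe(dimming):
    """Simulate one flare: each differs by up to 10% from the typical one."""
    return {
        ch: true_flare[ch] * rng.uniform(0.9, 1.1) * dimming.get(ch, 1.0)
        for ch in CHANNELS
    }


template = learn_template([observe({}) for _ in range(200)])  # no degradation
current = [observe(true_dimming) for _ in range(200)]          # degraded instrument
print({ch: round(v, 2) for ch, v in estimate_degradation(template, current).items()})
```

Averaging over many flares is what lets the estimate separate instrument dimming from the natural flare-to-flare variation in brightness.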

“This was the big thing,” Dos Santos added. “Instead of just identifying it on the same wavelength, we’re identifying structures across the wavelengths.”

This cross-wavelength comparison gives researchers more confidence in the calibration the algorithm identifies. Indeed, when they compared their virtual calibration data with the sounding rocket calibration data, the machine learning program’s results were spot on.

With this new process, researchers are poised to continuously calibrate AIA’s images between sounding rocket flights, improving the accuracy of the SDO data that scientists rely on.